Overview

We’ll walk through a basic RAG (retrieval-augmented generation) example in Python, using Pinecone as the vector database and Ollama to run the LLM locally. By the end of this tutorial you will have a working RAG setup to start experimenting with.

Prerequisites

Repository with the code

If you want to play around with the finished code from this blog post, go here:

Setup

Start a new folder for your new project:

    mkdir rag-application/ && cd rag-application/ && touch main.ipynb

We will use pipenv to make sure the dependencies you install are the same ones needed to run the code.

Create a Pipfile at the root of the folder with the following contents. It lists all the dependencies that we need.

    [[source]]
    url = "https://pypi.org/simple"
    verify_ssl = true
    name = "pypi"

    [packages]
    ollama = "*"
    python-dotenv = "==1.0.0"
    sentence-transformers = "==2.2.2"
    ipykernel = "==6.28.0"
    # The SDK is published as "pinecone"; the older "pinecone-client" package
    # installs the same module and should not be listed alongside it.
    pinecone = "==7.0.0"

    [dev-packages]

    [requires]
    python_version = "3.11"

If you don’t have pipenv installed, click here to install it.

Run the following command:

    $ pipenv install

You should now see a Pipfile.lock file in your project’s folder if everything ran successfully.

Initializing environment variables

Initialize the Pinecone environment variable by copying your Pinecone API key into your .env file.

To create the file:

    touch .env

To add the key to it:

    PINECONE_API_KEY=[INSERT_YOUR_KEY_HERE]

First Python cell

Then open main.ipynb in your editor. Preferably you’d use VS Code or Cursor for this, since both editors support Python notebooks.

A quick overview of the dependencies that we’re using in the first Python cell:

- Pinecone: The vector database we will use for this example.

- Ollama: The tool we will use to run the LLM locally for this example.

Vector databases store each piece of text as a vector of numbers (an embedding), which makes it easy to query the database by comparing how close a given query is to what is stored.

Ollama runs LLMs locally. The models it serves are typically quantized so that they can run with limited RAM on a consumer-grade laptop.
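To make “how close a query is to what is stored” concrete, here is a minimal sketch of a nearest-neighbor lookup in plain Python. It assumes cosine similarity as the closeness measure, which is one common choice, and it uses made-up 3-dimensional vectors and record ids. It is not how Pinecone is implemented internally.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query_vector, stored, k=2):
    """Return the ids of the k stored vectors most similar to the query."""
    ranked = sorted(
        stored.items(),
        key=lambda item: cosine_similarity(query_vector, item[1]),
        reverse=True,
    )
    return [record_id for record_id, _ in ranked[:k]]

# Hypothetical vectors for illustration; real embeddings have far more dimensions.
stored_vectors = {
    "dog_joke": [0.9, 0.1, 0.0],
    "cat_joke": [0.7, 0.3, 0.1],
    "tax_joke": [0.0, 0.2, 0.9],
}
query_vector = [1.0, 0.0, 0.0]

print(top_k(query_vector, stored_vectors))
```

The two records whose vectors point in nearly the same direction as the query come back first, which is the behavior a vector database provides at much larger scale.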

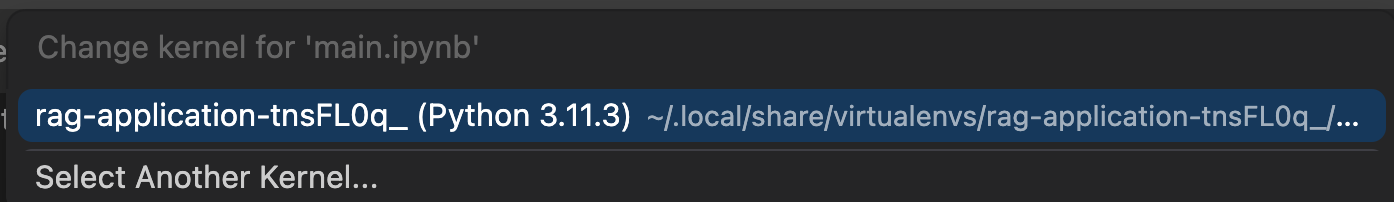

In your Python notebook, make sure you pick the Python kernel from the environment that was created when running pipenv install.

Create a new Python cell with the following:

    # Import to interface with the vector database.
    from pinecone import Pinecone

    # Import the chat function and response type used to talk to the local LLM.
    from ollama import chat
    from ollama import ChatResponse

    # Load environment variables (such as PINECONE_API_KEY) from the .env file.
    from dotenv import load_dotenv

    load_dotenv()

Creating the Vector Database index

Next, we’ll create the index in Pinecone so that you can add records to the database.

The model that we pass as a parameter when creating the index is the machine learning model used to generate text embeddings. In simple terms, it maps each piece of text to a long list of floats, such that when those lists are treated as points in a multidimensional space, related records end up close to each other.
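As a rough illustration of text becoming a list of floats, the sketch below uses a toy word-count “embedding” over a tiny hand-picked vocabulary. The vocabulary, the toy_embed function, and the sample sentences are all hypothetical. A real embedding model such as the one used in this post learns its dimensions from data and captures meaning far better than word counts do.

```python
import math

# Hypothetical toy vocabulary; each word is one dimension of the vector.
VOCABULARY = ["dog", "puppy", "bark", "tax", "invoice"]

def toy_embed(text):
    """Map text to a list of floats by counting vocabulary words (illustration only)."""
    words = text.lower().split()
    return [float(words.count(term)) for term in VOCABULARY]

dog_query = toy_embed("a joke about a dog that can bark")
dog_joke = toy_embed("my dog and her puppy bark at the mailman")
tax_joke = toy_embed("the tax office lost my invoice")

# math.dist is the Euclidean distance between two points: smaller means closer.
print("query vs dog joke:", math.dist(dog_query, dog_joke))
print("query vs tax joke:", math.dist(dog_query, tax_joke))
```

The dog-related texts land closer together than the unrelated one, which is the property the index relies on when it searches.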

Create a new Python cell with the following:

    import os

    # Read the API key that load_dotenv() loaded from the .env file.
    pinecone_api_key = os.getenv('PINECONE_API_KEY')
    pc = Pinecone(api_key=pinecone_api_key)

    index_name = "rag-example"

    # Create the index only if it does not exist yet.
    if not pc.has_index(index_name):
        pc.create_index_for_model(
            name=index_name,
            cloud="aws",
            region="us-east-1",
            embed={
                "model": "llama-text-embed-v2",
                # Embed the "chunk_text" field of each record.
                "field_map": {"text": "chunk_text"}
            }
        )

Reading the dataset and uploading it to the vector database

Download the file below and place it in the project’s root folder:

Create batches in memory for the data that we want to upload. We do this to prepare for uploading the records to Pinecone, because there is a rate limit when creating records in Pinecone with a basic account.
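The batching idea on its own can be sketched as a small helper that slices a list into fixed-size groups. The make_batches name and the generated sample records are hypothetical; the batch size of 50 mirrors the one used in this post.

```python
def make_batches(records, batch_size=50):
    """Split a list into consecutive slices of at most batch_size items."""
    return [
        records[start:start + batch_size]
        for start in range(0, len(records), batch_size)
    ]

# Hypothetical stand-in records shaped like the ones uploaded in this post.
records = [
    {"_id": f"rec{n}", "chunk_text": f"joke number {n}"}
    for n in range(1, 121)
]

batches = make_batches(records)
print([len(batch) for batch in batches])
```

The cell that follows applies the same idea while reading the jokes file.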

    import json

    # Group the records into batches of 50, keyed by batch number.
    records_batches = {}

    with open('./reddit_jokes.json', 'r') as file:
        json_reader = json.load(file)

        i = 0
        batch = 0
        for joke in json_reader:
            # Start a new batch after every 50 records.
            if i % 50 == 0 and i != 0:
                batch += 1
            records_batches.setdefault(batch, [])
            i += 1

            full_joke = joke["title"] + '\n\n' + joke["body"]
            records_batches[batch].append({
                "_id": f"rec{i}",
                "chunk_text": full_joke
            })

    print(f"Number of joke record batches: {len(records_batches.keys())}")

Finally, we can upload the records using the batches we just created. This step will take a few minutes to complete.

    import time

    # Retrieve the connection to the index
    dense_index = pc.Index(index_name)
    pinecone_index_namespace = "reddit_jokes_namespace"

    # Insert records in batches, pausing between batches to stay under the rate limit
    for batch_index, batch_data in records_batches.items():
        dense_index.upsert_records(pinecone_index_namespace, batch_data)
        time.sleep(10)

    # Print the index's stats to confirm that the records were created:
    stats = dense_index.describe_index_stats()
    print(stats)

Putting it all together with an LLM

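Set up the RAG system and put it all together with an LLM, served by Ollama in this case. This assumes the llama3.2 model is already available in your local Ollama install (for example via ollama pull llama3.2).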
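Before the full cell, the augmentation step can be looked at in isolation: it is plain string assembly that joins the retrieved passages and places them, together with the question, into the prompt. The build_system_prompt helper and the sample passages below are hypothetical and only sketch that step, with a shortened prompt.

```python
SEPARATOR = "\n\n\n====================================\n\n\n"

def build_system_prompt(query, passages):
    """Join retrieved passages and interpolate them, with the query, into one prompt."""
    augmentation_data = SEPARATOR.join(passages)
    # The f prefix matters: without it, {query} and {augmentation_data}
    # would be sent to the model as literal text.
    return f"""
The user's question is:
{query}

Use ONLY the following data as input:
{augmentation_data}
"""

# Hypothetical passages standing in for the vector database hits.
passages = ["Joke one about a dog.", "Joke two about a dog."]
prompt = build_system_prompt("Share with me the best jokes about dogs.", passages)
print(prompt)
```

The full cell, with the retrieval and chat calls around this step, follows.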

    pinecone_index_namespace = "reddit_jokes_namespace"

    query = "Share with me the best jokes about dogs."

    # Retrieve the 3 records closest to the query from the vector database.
    results = dense_index.search(
        namespace=pinecone_index_namespace,
        query={
            "top_k": 3,
            "inputs": {
                'text': query
            }
        }
    )

    augmentation_data = []
    print(f"Got {len(results['result']['hits'])} results from the Vector Database")
    for hit in results['result']['hits']:
        augmentation_data.append(hit['fields']['chunk_text'])

    # Join the retrieved jokes into a single block of text for the prompt.
    separator = '\n\n\n====================================\n\n\n'
    augmentation_data = separator.join(augmentation_data)

    response: ChatResponse = chat(model='llama3.2', messages=[
        {
            'role': 'system',
            'content': 'You are a helpful assistant',
        },
        {
            'role': 'system',
            # f-string so that {query} and {augmentation_data} are filled in.
            'content': f"""
    The user's question is:
    {query}

    Approach this task step-by-step, take your time and do not skip steps.

    Based on the user's question and using the following data as input:
    {augmentation_data}

    1. Read each paragraph from the previous augmentation text.
    2. Create a response that summarizes ONLY the text relevant to the user's query.
    3. Create a message so the user can laugh at the jokes found in the data.
    """
        },
        {
            'role': 'user',
            'content': query,
        }
    ])

    print(response['message']['content'])

Conclusion

And that’s it! You now have a working RAG setup, and from here you can go in multiple directions. RAG has become a fundamental part of how Product and Engineering teams get value out of LLMs.

If your team needs help with RAG or anything related to LLMs or machine learning, check out my Consulting page!